What is with Excel Tables and the Data Model? Believe it or not, this is not the question I started with today, it was actually "which is faster; loading from CSV files or Excel?" My initial results actually brought up a surprising - and very different - question, which has become the subject of this post.

The testing stage:

Let's start by setting the background of my test setup…

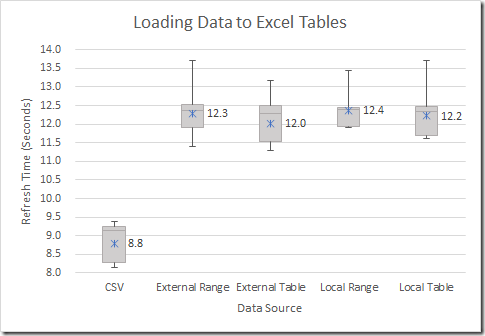

What does the test data look like?

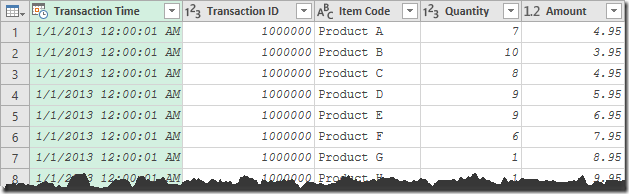

I started by wanting to test the difference in load speeds between data stored in an Excel table and a CSV. To do that, I started with a CSV file with 1,044,000 rows of data, which look like this:

What does my test query actually do?

The query to collect this data only has a few steps:

- Connect to the data source

- Promote headers (if needed)

- Set data types

- Load to the Data Model

Nothing fancy, and virtually no transformations.

Scenarios tested:

I decided to load the data into the Data Model, as I figured that would be fastest. And during testing, I decided to expand the locations from which I was pulling the source data. What I ended up testing for the data source (using the same data) were:

- A table in the same workbook

- A named range in the same workbook

- A CSV file

- A table in a different workbook

- A named range in a different workbook

And just for full transparency here, I turned Privacy settings off, as well as turned on Fast Data Load, trying to get the best performance possible. (I wanted to run the tests multiple times, and hate waiting…)

Your turn to play along…

All right, enough about the test setup, let's get into this.

Just for fun, which do you think would be the fastest of these to load the data to the Data Model? Try ranking them as to what you expect would be the best performing to worst performing. I.e. which will refresh the quickest, and which would refresh the slowest?

For me, I expected that order to be exactly what I actually listed above. My thoughts are that data within the workbook would be "closest" and therefore perform better since Excel already knows about it. I'd expect the table to be more efficient than the range, since Excel already knows the table's data structure. But I could see CSV having less overhead than an external file, since there are less parts to a CSV file than an Excel file.

And now for the great reveal!

These were generated by averaging the refresh times of 10 refreshes, excluding the initial refresh. (I wanted a refresh, not the overhead of creating the model.) I shut down all other applications, paused all file syncing, and did nothing else on the PC while the timing tests were running. The main reason is that I didn't want anything impacting the tests from an external process.

Okay, I hear you… "what am I seeing here?" It's a Box & Whisker plot, intended to show some statistics about the refresh times. It measures the standard deviations of the refresh times, and the boxes show the 2nd and 3rd quartiles. The whiskers show the variance for the other times. The fact that you can barely see those tells you that there wasn't a ton of significant variation in the testing times. To make it a bit easier to see the impact, I also added data labels to show the mean refresh time for each data source in seconds.

So basically the time to refresh 1,044,000 rows breaks down like this:

- Pulling from CSV was fastest at 8.1 seconds

- Pulling from a table in a different Excel file took 11.5 seconds

- Pulling from a regular range in a different Excel file took 11.8 seconds

And then we hit the stuff that is pulling from a named range in the current Excel file (67.3 seconds), and finally, pulling up the tail end of this performance test, is pulling data from a local Excel table into the Data Model at 67.5 seconds.

I even changed the order the queries refreshed, (not included in the plotted data set,) but still no noticeable difference.

Wow. Just wow.

Let's be honest, the table vs range is a negligible performance variance. At 0.2 to 0.3 seconds, I'd just call those the same. I'll even buy that pulling from a CSV is quicker than from an external Excel workbook. Less structure, less overhead, that makes sense to me.

But the rest… what is going on there? That's CRAZY slow. Why would it be almost 6 times slower to grab data from the file you already have open instead of grabbing it from an external source? That's mind boggling to me.

Is there a Data Model impact?

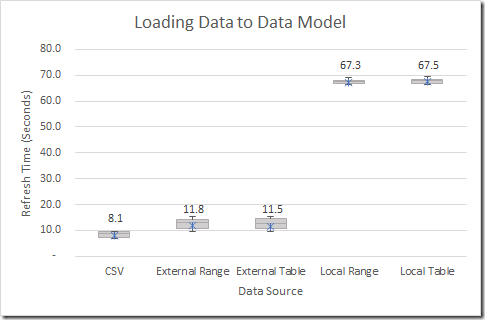

So this got me wondering… is there an impact due to the Data Model? I set it up this way in order to be consistent, but what if I repointed all of these so that they loaded into tables instead of the Data Model?

Here's the results of those tests - again in a Box & Whisker chart. The data labels are calling out the average refresh time over those 10 tests, and the error bars show how much variation I experienced (the largest spread being about 2.3 seconds):

To be honest, I actually expected loading to a table to be slower than loading directly into the data model. My reason is that Excel needs to set up the named ranges, table styles and such, which the Data Model doesn't really need. And based on these tests, it's actually supports that theory to a degree. When loading from CSV it was almost 10% faster to go direct to the Data Model (8.1 seconds) rather than to a worksheet table (8.8 seconds). (There is also virtually no difference in the refresh times for CSV, so it's quite consistent.)

Loading from tables and ranges in other workbooks also saw some slight performance gains by going directly to the Data Model, rather than landing in an Excel table.

But the real jaw dropper is the range and table from the current workbook. Now don't get me wrong, I can't see ever needing to grab a table and load it to a table with no manipulation, that's not the point here. All I was trying to do is isolate the Data Model overheard.

What is with Excel Tables and the Data Model?

So what is with Excel Tables and the Data Model? I'm actually not sure. I've always felt that Power Pivot adds refresh overhead, but holy smokes that's crazy. And why it only happens when reading from a local file? I don't have an answer. That's the last place I'd expect to see it.

So what do we do about it?

If performance is a major concern, you may not want to pull your data from an Excel table in the same workbook. Use a workbook to land the data in an Excel Table, then save it, close it and use Power Query to pull that into the Data Model. If you're pushing a million rows it may be worth your time.

Something else I tried, although only in a limited test, is landing my query in a worksheet then linking that table to the Data Model. Oddly, it doesn't seem to have a huge impact on the Data Model refresh (meaning it doesn't have the massive overhead of loading from table to the Data Model via Power Query.) Of course, it limits your table to 1,048.575 rows of data, which sucks.

I'm not sure if this is a bug or not (it certainly feels like one), but it certainly gives you something to think about when pulling data into your Power Pivot solution.

Working around the issue...

First off, thanks to AlexJ and Lars Schreiber for this idea... they asked what would happen if we pulled the data via Excel.Workbook() instead of using the Excel.CurrentWorkbook() method in Power Query. (The difference is that you get Excel.Workbook() when you start your query from Get Data --> Excel, and you get Excel.CurrentWorkbook() when you start your query via Get Data --> From Table or Range.)

Using Excel.Workbook() to pull the contents from itself, in a single test, returned results of 11.4 seconds, which is right in line with pulling from an external source. So it's starting to look like Excel.CurrentWorkbook() doesn't play nice with the Data Model. (It doesn't seem to have an impact with loading to tables, as shown above.)

Of course, one big caveat is that Excel.Workbook() doesn't read from the current data set, it reads from the most recently saved copy of the file.

So this gives as an opportunity here... if we cook up a macro to save the file, then refresh the query via the External connector, we should get the best performance possible. To test this, I cooked up a macro to save the file, then refresh the data via the Excel.Workbook() route. In two tests I ended up at 12:02 seconds and 12:39 seconds, so it looks like it works. While that's not an extensive study, the saving process only adds a bit of overhead, but it's certainly made up by avoiding the refresh lag.

Here's a copy of the macro I used to do this:

With ActiveWorkbook

.Save

.Connections("Query - Current_via_ExternalLink").Refresh

End With

18 thoughts on “What is with Excel Tables and the Data Model”

Very interesting. I ranked all methods in initial order (you too?), and all result's is a real "jaw dropper".

My first thought was that there is a "let's save a temp file somewhere and then pull table from it as from external" under the hood.

But it looks like I'm wrong.

It is a bug, I think. At least it is a big performance leak. Definitely, different people wrote these functions.

Ken, will a workbook allow two worksheet tables with 1,044,000 rows each? If so, what is the load timing for a second local table added to the data model? And, does it make a difference if the second table is added after any relationships are established?

This came at a perfect time... I'm currently reading an external XL file with 150K+ rows into PQ. It seems rather slow and was wondering if I imported into a local worksheet if it would be faster. I see that you've done all the testing and I'm best to stick with what I have. 🙂

Good question Randy... I just tried pulling two 1,044,000 row tables from worksheets, appended them and loaded them to the model. I'm not going to test it too many times, but in my initial test, it works out to 130 seconds. So basically double what a single 1,0444,000 row table was. So it's not something like overhead to open the data model for processing, it appears to be something that slows down the process line by line. Yuck.

@Nick, it really depends if you want more time to go get a coffee while you're waiting for it to process. 🙂

Very interesting. I wonder if iits related to a closed Excel file being zipped and therefore something to do with data compression performance in the data model. Curious

No idea, Wyn. Could be. I've forwarded it to a couple of key players on the Excel team to look at, as it seems like a pretty big bug to me. I've built lots of models that pull from internal Excel tables, and I know many users who have as well, so I'm pretty sure this will affect a lot of users.

That's...Just mind-boggling. I would have thought CSV was the fastest for the reasons you outlined, but never would have guessed the local problem. I have run into that from time to time, but I always thought it was the PowerPivot calculations I'd already put in that were adding the refresh time.

Thanks for sharing. I know nothing is being "calculated" but if you turn off calculation does that have an impact? The external files are closed and so are not calculated whereas the current file is open and maybe the calculation engine wants to get involved.

No luck there, Neale. Even with calc in manual mode it still takes virtually the same amount of time. (I can't say I'm super surprised by this, as the model doesn't have any DAX measures, Pivots or Cubes. I set it up to just load to the model, with nothing else, so calc really shouldn't be a factor.)

Something related perhaps to the difference between Excel.CurrentWorkbook() and Excel.Workbook() ??

You could try a test to read a local table using Excel.Workbook(File.Contents(xxx)) to see if it outperforms Excel.CurrentWorkbook().

(And come to think of it, what of we could read the data model from another workbook?)

Wow.... @Alex, using Excel.Workbook() to pull the contents from itself, in a single test, returned results of 11.4 seconds, which is right in line with pulling from an external source. So I'm starting to come to the conclusion here that Excel.CurrentWorkbook() doesn't play nice with the Data Model.

Of course, Excel.Workbook() doesn't read from the current data set, it reads from the most recently saved copy.

Just for grins, I cooked up a macro to save the file, then refresh the data from the Excel.Workbook() route. In two tests I ended up at 12:02 seconds and 12:39 seconds. So while that's not an extensive study, it looks like it only adds a bit for the save time, which certainly makes up for avoiding the refresh lag.

Oh... an I wish we could read the data model from another workbook. Unfortunately, to my knowledge, there's no way to do that. 🙁

Hi Ken, regarding your comment above:

Ken Puls on September 8, 2017 at 7:58 am said:

Oh... an I wish we could read the data model from another workbook. Unfortunately, to my knowledge, there's no way to do that. ?

Would a combination of building the data model in Power BI publishing that data model to http://www.PowerBI.com, and then connecting to THAT hosted dataset as a connection (using Analyze with Excel)?

Not sure if that would work, thinking out loud a little...

~ Chris H

Pingback: Set of CSVs as a database for Power BI – Ivan Bond's blog

Hey Chris,

Not really. Would add a lot of overhead, and besides, the data reads from Power BI like SSAS cubes... it doesn't really expose the full data model.

Ken, I just noticed that ThisWorkbookDataModel is an OLE DB Connection in the Excel 2016 Queries and Connections panel. Also the button to [Export Connection File] works. (I don't actually know how to use connection files).

Does this offer any opportunity for reading the data model data externally?

Not sure Alex. I know I can read some stuff through VBA, but in my experiences I haven't been able to tap into the data (although it's been a while since I tried.)