One of the updates in the latest Excel 2016 Insider preview build is a new Combine Binaries experience for Power Query. This is both good and bad, and in this post we'll take a detailed look at both sides before it hits your Excel implementation. (With this new feature in the O365 Fast Insider preview, and already present in Power BI Desktop's November update, I'd expect to see it show up in the January Power Query update for Excel 2010/2013.)

A Little History Lesson

Before we start, let's get everyone on the same page. If you didn't know this, the Combine Binaries feature is one of the coolest things in Power Query and Power BI Desktop, as it lets you do a one-click consolidation of "flat" files (txt, csv and the like).

How Combine Binaries (Used to) Work

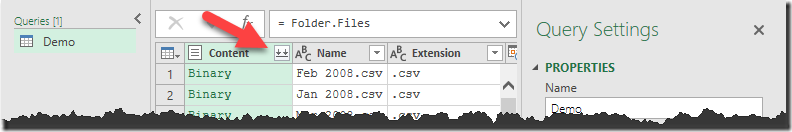

To make this work, you would set up a new query to get data From File --> From Folder, browse to the folder that contained your files, select it, and click Edit at the preview window. At that point all the magic happened when you clicked the Combine Binaries button at the top of the Content column:

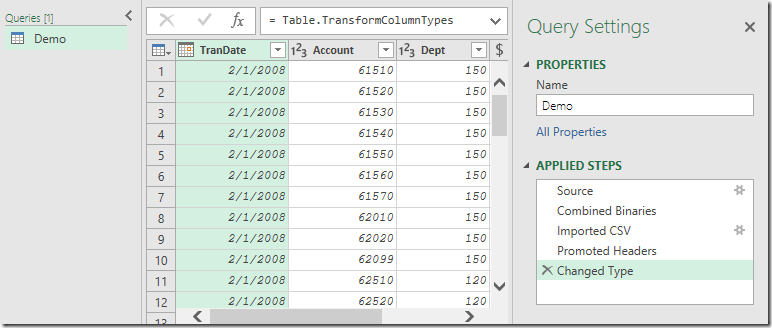

That "one-click" experience would take your source folder and add four steps to your query:

And it puts it all together nicely. So cool, so slick, so easy.
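For readers who think in code, here is a minimal Python sketch (not Power Query M) of that old order of operations. It assumes the four steps were roughly: combine the raw file contents, parse the result as CSV, promote the first row to headers, and set data types. The type step is left out here, and the file names and contents are made up for illustration.

```python
import csv
import io

# Hypothetical sample files: every CSV carries its own header row.
files = {
    "Jan 2008.csv": "Dept,Amount\n120,10\n130,20\n",
    "Feb 2008.csv": "Dept,Amount\n120,5\n",
}


def old_combine(files):
    """Mimic the old order: join the raw file contents first, then parse once."""
    # Combine binaries: one big blob. The file names are dropped at this point.
    blob = "".join(files.values())
    # Import as CSV: parse the whole blob in one go.
    rows = list(csv.reader(io.StringIO(blob)))
    # Promote headers: only the very first row becomes the header.
    header, data = rows[0], rows[1:]
    return [dict(zip(header, row)) for row in data]


combined = old_combine(files)
# The second file's header survives as an ordinary data row.
print(combined[2])  # → {'Dept': 'Dept', 'Amount': 'Amount'}
```

A later type-conversion step would then hit errors on that repeated header row, which is why it had to be filtered out by hand. The sketch also quietly relies on each file ending with a newline; without one, the last row of one file and the header of the next would run together.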

Why did we need a new Combine Binaries experience?

So the first real question here is "Why even modify this experience at all?" As it happens, there were a few issues in the original experience:

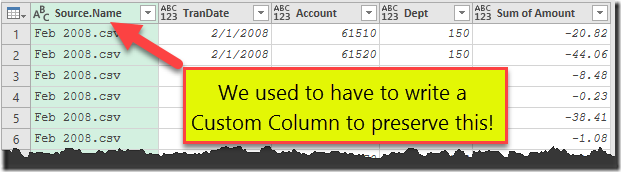

- You lost the original file name details. If you wanted to keep that, you needed to roll your own custom function.

- Headers from each file were not removed, so you'd have to filter those out manually.

- It could only work with flat files (csv, txt, etc.), not more complex files like Excel workbooks.

For these reasons, the team decided to build a more robust system that could deal with more file types and more data.

The New Combine Binaries Experience

So let's look at what happens in the new experience. We start the same way as we always did:

- Set up a new query to get data From File --> From Folder

- Browse to the folder that contains your files, select it and click OK

- Click Edit at the preview window

- Click the Combine Binaries button at the top of the Content column

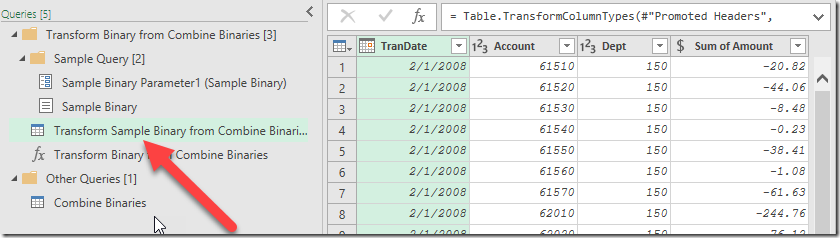

At this point a whole bunch of crazy stuff happens, and your query chain looks totally different from how it did in the past:

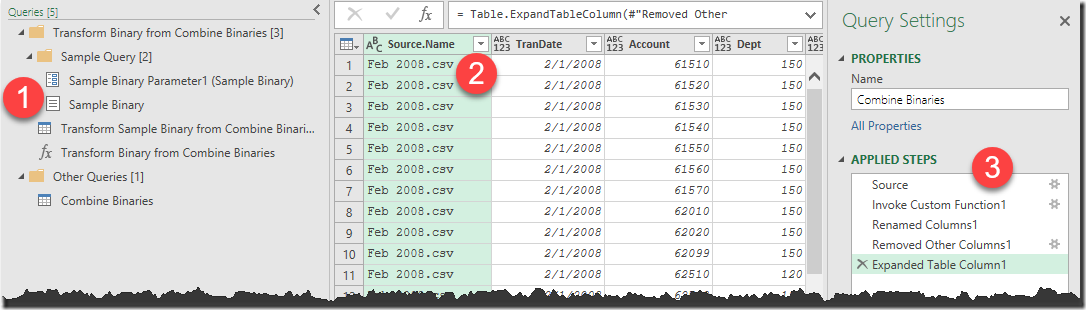

There are 3 main areas that are different here:

- A whole bunch of new queries and parameters with very similar names

- The original source name is retained

- Different query steps than in the past

If your first reaction to this is being overwhelmed, you're not alone. I'll admit that my first reaction was not a happy one. There is a huge amount of stuff injected into the file, it's difficult to follow the relationships in the M code (even if you do know how to read it all), and it isn't intuitive what to do with it.

At the end of the day, the biggest change here is that things happen in a different order than in the past. The original implementation of the Combine Binaries feature set combined the files first, then applied the other Power Query steps.

The new method actually examines the individual files, formats them via a custom function, then appends them. This is very different, and it actually gives us a bit more flexibility with the files.
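Here is a rough Python model of the new order of operations, using the same made-up files as before. A per-file transform (standing in for the generated custom function) parses each file and promotes its own header, the file name is attached as a Source.Name column, and only then are the results appended. This is a conceptual sketch, not the M code Power Query actually generates.

```python
import csv
import io

# Same hypothetical files as before.
files = {
    "Jan 2008.csv": "Dept,Amount\n120,10\n130,20\n",
    "Feb 2008.csv": "Dept,Amount\n120,5\n",
}


def transform_file(content):
    """Stand-in for the generated custom function: parse ONE file, promote its header."""
    rows = list(csv.reader(io.StringIO(content)))
    header, data = rows[0], rows[1:]
    return [dict(zip(header, row)) for row in data]


def new_combine(files):
    """Mimic the new order: transform each file, tag it with its name, then append."""
    combined = []
    for name, content in files.items():
        for record in transform_file(content):
            # Keep the originating file name, like the Source.Name column.
            combined.append({"Source.Name": name, **record})
    return combined


combined = new_combine(files)
print(len(combined))  # → 3 (no stray header rows)
```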

What's the end effect that will be different for you? Simply this:

- More queries in the chain (maybe you don't care), and

- The file name is preserved by default (which was not there in the past)

Now if you are good with everything here, then no worries. Continue on, and you're good to go. But what if you want to make changes?

Making Changes in the new Combine Binaries Experience

The biggest challenge I have with this new implementation is that if you are a user hitting this for the first time, how and what can you change?

Changing or Preserving File Details

What if I wanted to keep more than just the file name… maybe I wanted to keep the file path? You may be surprised, but this is actually pretty easy now. Since Power Query wrote a custom function for us to import the data, all the file properties were already preserved for us. It simply chose to keep only the source file name.

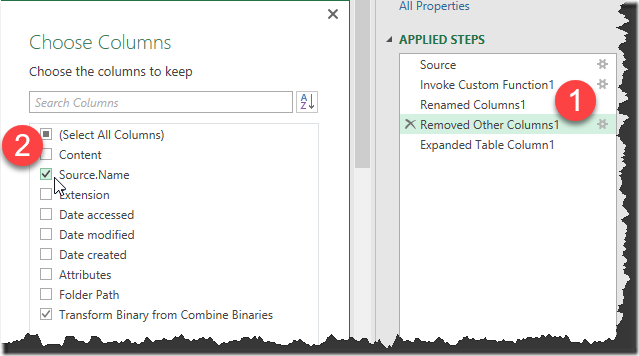

To modify this, we just look at the steps in the Applied Steps window, click the gear next to "Removed Other Columns", and choose the ones we do want to keep:

So in this case, I can actually uncheck the box next to Source.Name and remove that from the output. (We want to keep the very last step, as that is what actually appends the data from the files).

Also… after looking at the Applied Steps window, I noticed that the Renamed Column1 step was only in place to avoid a potential name conflict if you had a column called Name (there is a really good reason for this, which I'll look at in another post). In this case it is totally unnecessary, so we can just delete it.
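Conceptually, the Removed Other Columns step just picks a subset of the file-detail columns before the nested tables are expanded. The Python sketch below assumes a simplified listing with a few of the columns From Folder returns (Name, Folder Path, Extension), plus a hypothetical Tables column holding each file's transformed data. It keeps Folder Path instead of the file name.

```python
# Hypothetical, simplified rows from a From Folder listing after the per-file
# transform has run. "Tables" stands in for the column holding each file's data.
file_rows = [
    {"Name": "Jan 2008.csv", "Folder Path": "C:\\Data\\", "Extension": ".csv",
     "Tables": [{"Dept": "120", "Amount": "10"}]},
    {"Name": "Feb 2008.csv", "Folder Path": "C:\\Data\\", "Extension": ".csv",
     "Tables": [{"Dept": "120", "Amount": "5"}]},
]


def remove_other_columns(rows, keep):
    """Keep only the listed columns, like the Removed Other Columns step."""
    return [{col: row[col] for col in keep} for row in rows]


def expand(rows, table_col):
    """Expand the nested tables, repeating the kept file details on each record."""
    out = []
    for row in rows:
        details = {k: v for k, v in row.items() if k != table_col}
        for record in row[table_col]:
            out.append({**details, **record})
    return out


# Keep Folder Path instead of Name. The table column must stay, or there is
# nothing left to expand.
result = expand(remove_other_columns(file_rows, ["Folder Path", "Tables"]), "Tables")
print(result[0])  # → {'Folder Path': 'C:\\Data\\', 'Dept': '120', 'Amount': '10'}
```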

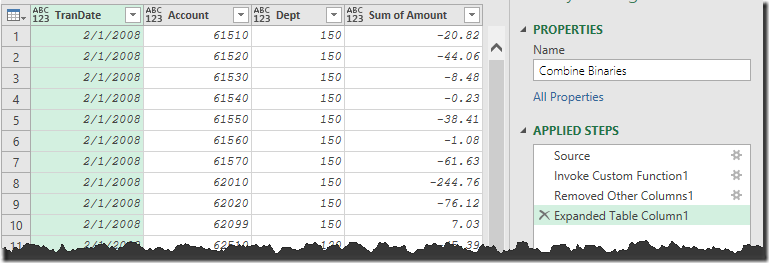

So now our code looks as shown below, and the output looks similar to what we would see in the old experience:

Notice I said that it looks similar - not that it is identical. This is actually better as there are no headers repeating in the data at all, so we don't need to get rid of those. That is an improvement.

Modifying the Import Function

Now, that's all good for the details about the file, but what about the data? What if I only want records for department 120?

To understand the impact of this next piece, we need to understand that there are two ways to combine data:

- Bring ALL the data into Excel, then filter out the records you don't want

- Filter out the records you don't want, and ONLY bring in the ones you do

Guess which will consume fewer resources overall? Method 2. The challenge is that Power Query encourages you to use Method 1. You're presented with a full table that is just begging you to filter it at this point… but you are better off dealing with it using Method 2… only it's not obvious how to do that.
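To see why Method 2 is lighter, here is a small Python comparison using made-up files. Both approaches return the same department 120 records, but Method 1 carries every row into the combined table before filtering, while Method 2 only appends the rows that pass the filter. Each file is still read in full either way; this sketch does not model how Power Query itself buffers or streams data.

```python
import csv
import io

# Hypothetical monthly files with a mix of departments.
files = {
    "Jan 2008.csv": "Dept,Amount\n120,10\n130,20\n140,30\n",
    "Feb 2008.csv": "Dept,Amount\n120,5\n150,7\n",
}


def parse(content):
    """Parse one CSV file into a list of dicts keyed by its header row."""
    return list(csv.DictReader(io.StringIO(content)))


def method_1(files, dept):
    """Append everything, then filter the combined table."""
    combined = [row for content in files.values() for row in parse(content)]
    rows_carried = len(combined)  # rows that reach the combined table
    return [row for row in combined if row["Dept"] == dept], rows_carried


def method_2(files, dept):
    """Filter inside each file, and append only the rows that pass."""
    combined = []
    for content in files.values():
        combined.extend(row for row in parse(content) if row["Dept"] == dept)
    return combined, len(combined)


result_1, carried_1 = method_1(files, "120")
result_2, carried_2 = method_2(files, "120")
print(result_1 == result_2, carried_1, carried_2)  # → True 5 2
```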

The kicker here is that the logical flow of Power Query's Applied Steps window has been interrupted by the "Import Custom Function1" step. And if you've ever used Power Query before, you know that modifying a custom function is far from a beginner-friendly thing to do.

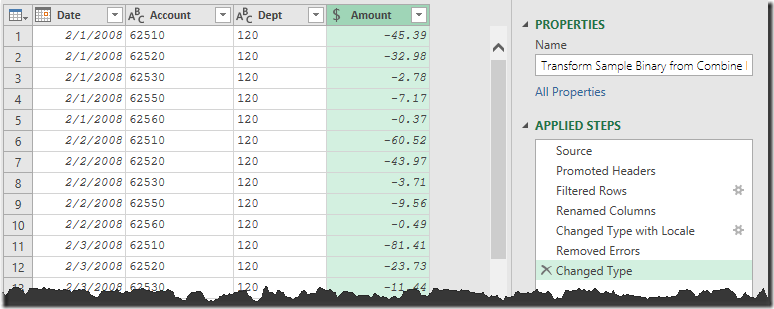

As it happens though, the Power Query team has given us a way to easily modify the custom function, it's just… well… let's just say that the name isn't as intuitive as it could be:

So this is the secret… if you select the "Transform Sample Binary from Combine Binaries" (what a mouthful!) it takes you to the template that the function is actually using. To put this another way… any changes you make here will be used by the custom function when it imports the data.

So here are the changes I made in the Transform Sample:

- Removed the Change Type step

- Filtered Dept to 120

- Renamed TranDate to Date

- Renamed Sum of Amount to Amount

- Used Change Type with Locale on the Date column to force it to US dates

- Removed Errors from the Date column

- Applied Change Type to the other columns
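As a rough model of what that Transform Sample does to each file, here is a Python sketch. The column names TranDate, Dept and Sum of Amount come from the steps above; the Account column and all sample rows are assumptions. The US-date step is modelled by parsing with a month/day/year pattern, and rows whose date fails to parse are dropped, which is the job Removed Errors does.

```python
import csv
import io
from datetime import datetime


def transform_sample(content):
    """Hypothetical per-file transform mirroring the Transform Sample steps."""
    out = []
    # No automatic type change: every value is still text at this point.
    for row in csv.DictReader(io.StringIO(content)):
        # Filtered Dept to 120 (compared as text).
        if row["Dept"] != "120":
            continue
        # Change Type with Locale: read TranDate as a US month/day/year date.
        try:
            parsed = datetime.strptime(row["TranDate"], "%m/%d/%Y").date()
        except ValueError:
            # Removed Errors: drop rows whose date cannot be read that way.
            continue
        # Renamed TranDate -> Date and Sum of Amount -> Amount, then typed the rest.
        out.append({
            "Date": parsed,
            "Account": row["Account"],
            "Dept": int(row["Dept"]),
            "Amount": float(row["Sum of Amount"]),
        })
    return out


# Hypothetical files; "31/01/2008" is day-first, so it fails the US parse.
files = {
    "Jan 2008.csv": "TranDate,Account,Dept,Sum of Amount\n"
                    "1/31/2008,64010,120,100.5\n"
                    "1/31/2008,64010,130,20\n"
                    "31/01/2008,64010,120,3\n",
    "Feb 2008.csv": "TranDate,Account,Dept,Sum of Amount\n"
                    "2/29/2008,64010,120,7\n",
}

# The "master query": the same function applied to every file, then appended.
master = [record for content in files.values() for record in transform_sample(content)]
print(len(master))  # → 2
```

Because the master query only ever calls this one function, editing it (say, to filter a different department) changes every file's output at once, which is why the results flow through to the main query without further work.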

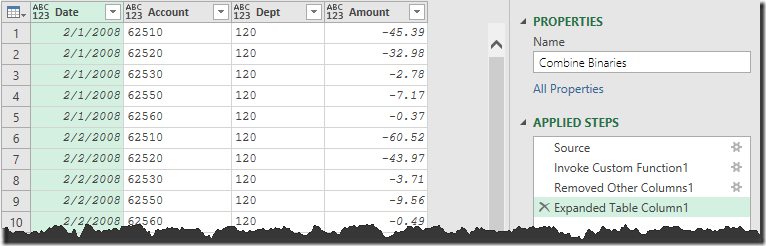

In other words, I do the majority of the cleanup work here based on the single file. The end result for the Transform Sample query looks like this:

Hopefully this makes sense. It's a fairly straightforward transformation of a CSV file, but rather than doing the work on the files that have already been combined, I'm doing it in the Transform Sample query. Why? Because it pre-processes my data before it gets combined. And the cool thing? Now we go back to the main query I started with:

The results have already propagated, and we're good to go.

Thoughts and Strategies

Overall, I think the new Combine Binaries experience is a good thing. Once we know how to modify it properly, it allows us some great flexibility that we didn't have before - at least not without writing our own custom functions. There are a couple of things we do need to think about now though.

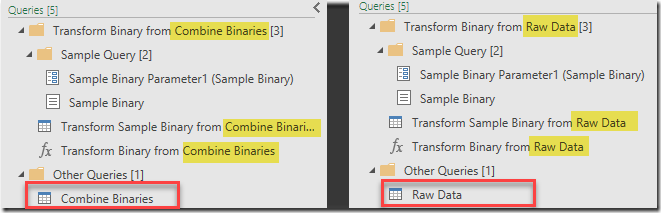

Name Your Queries Early

Under this new method, I'd highly recommend that you change the name of your query BEFORE you click that Combine Binaries button. All those samples on the left side inherit their name from the name of the query, and Power Query is not smart enough to rename them when you rename your query. Here's a comparison of two differently named queries:

So while I used to think about renaming my query near the end of the process, we really need to think about that up front (or go back and rename all the newly created queries later).

Where Should You Transform Your Data?

The next big thought is where you should run your transforms… should you do it on the master query (the one with the consolidated results), or the sample used for the transformation function?

The answer to that kind of depends. A few instances where this could really matter:

- Filtering: I would always filter in the sample. Why spend the processing time to bring in ALL of the data, only to filter it at the end? Much better to modify the Transform Sample to avoid bringing in those records in the first place.

- Sorting: I would suggest that most times you'd want to run your sorts in the master query. Let's say you have a file for each month, and you sort by date. If you do it in the master query, things will be sorted sequentially. If you do it in the Transform Sample, the rows will be sorted within each file, but if the files are out of order, your master will still be out of order until you re-sort by date. (Apr comes before Jan in the alphabet.)

- Grouping: This one is tricky… most of the time you'll probably want to load all of your transactions into the master query, then group the data. So if I want to group all transactions by month and account code, I'd do it in the master query. But there are definitely instances where you'll want to pre-process your data, grouping the data inside the individual file before it lands in your master query. A case in point might be the daily payments list for payments you issue to vendors. If they are batched up then sent to the bank, you'd want to import the file with all daily records, group them, then land that pre-grouped data into your master query. That is an operation that you may wish to do at the Transform Sample level.
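The sorting point is easy to check with a quick Python sketch. The two month files and their dates are made up, and the sketch assumes the files are appended in alphabetical name order, so Apr lands before Jan. Sorting inside each file leaves the combined result out of date order; sorting after the append does not.

```python
from datetime import date

# Hypothetical month files, each holding (date, amount) rows out of order.
files = {
    "Jan.csv": [(date(2008, 1, 9), 5.0), (date(2008, 1, 3), 7.0)],
    "Apr.csv": [(date(2008, 4, 2), 30.0), (date(2008, 4, 1), 10.0)],
}

# Assume files are appended in name order, so "Apr.csv" comes before "Jan.csv".
file_order = sorted(files)

# Sort in the Transform Sample: each file is sorted, then the files are appended.
sorted_per_file = [row for name in file_order for row in sorted(files[name])]

# Sort in the master query: append everything first, then sort once.
sorted_in_master = sorted(row for name in file_order for row in files[name])

print(sorted_per_file[0][0])   # → 2008-04-01 (April first: out of order)
print(sorted_in_master[0][0])  # → 2008-01-03
```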

The good news is that it is easy to experiment here and switch things up as you need to.

And One More Major Impact…

This blog post has really focussed on how the new Combine Binaries experience changes the way text files are imported. What it doesn't cover, however, is why it was REALLY built. I'm going to cover that in tomorrow's blog post: how the new Combine Binaries experience allows one-click consolidation of Excel files!

17 thoughts on “New Combine Binaries Experience”

I had the same experience as you when I tried this out in Power BI Desktop. A little overwhelming at first, but it makes sense once you dig into it.

One issue that they are planning to fix in February for PBI Desktop is that Change Type transformations are lost in the final output. This also seems to be the case in Excel, judging from your final screenshot, where the column has reverted to a mixed type.

http://community.powerbi.com/t5/Issues/combine-binaries-on-csv-not-flowing-Type-Change-to-final-output/idi-p/96596

Ken, I'd also be interested in your view on this...

Not sure what the answer is, but I don't like the way the sample binary file name gets hard-coded into one of the first few steps.

The beauty of consolidate from folder is that it shouldn't care what file names you have in there.

The query still works even if you delete the file used as the sample binary from your source folder, but that particular step is no longer valid. Easy to fix, but not a perfect solution yet 🙂

This seems to work only in Power BI and not in Excel

@Dave, this is only present in Excel if you're on the Insider Preview Fast build. For others I expect to see it in the January 2017 update.

@Wyn, I totally agree. Why it doesn't use a dynamic pull from the original table, I'm not sure.

Understood. Thanks Ken!

@Wyn, @Ken I posted an idea to have the dependency on a particular file removed: https://ideas.powerbi.com/forums/265200-power-bi-ideas/suggestions/17608318-make-combine-binaries-independent-from-existence

My vote added, Marcel.

Hi guys, I ran into an issue lately, I'm guessing after recent O365 updates. I have created several models based on the same csv data in a single folder, and they work perfectly. Now, creating new models based on the same data returns all the columns in one single column, meaning it's concatenated. Adding new data to the older models works perfectly, and PBID also works fine.

I also just created a few csv files in a small 30x4 grid in order to test the Combine Binaries function on completely new data. Again, it returns it all in one single column.

This is very frustrating, any ideas are much appreciated.

Can you do me a favour? Post this in our forum at http://www.excelguru.ca/forums. Reason is that you can attach a copy of the test files so we can have a play with it. If it's possible to repro here too, I'll push it off to MS to look at.

Hello, there!

I found this blog from Japan and actually purchased your book "M is for (Data) Monkey". Thanks to the tips inside the book, I've been able to improve much of our tedious work, but now I have a serious question about this new feature. The problem is that once I click the expand button of Content when working with a csv or text file, it gives me corrupted text for letters other than English. So I basically go back to Binary.Combine(previous step[Content]) and then double-click the CombinedBinary icon to extract the combined dataset, which works great as it used to. The thing is, I'm about to teach people in our company who have fewer coding skills, so I just wonder if there's any other easy way to pull the data like I just mentioned.

My guess is that you will probably need to go to the Sample Binary Transform, select the gear on the Source step and change it to import as a different spec. (Maybe use text file instead of CSV or change the character encoding). What you do there should translate for the combination.

Without seeing your data I can't say for sure, but that's the route that I would approach it.

Ken
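In code terms, the encoding issue boils down to decoding the file's bytes with the encoding they were actually saved in. The Python sketch below assumes a hypothetical Japanese CSV saved as Shift-JIS: decoding it as UTF-8 garbles the text, while decoding it as Shift-JIS recovers it. In Power Query, the corresponding setting would be the encoding option on the Source step that Ken mentions.

```python
# Hypothetical Japanese CSV, saved with the Shift-JIS encoding.
raw = "部門,金額\n120,100\n".encode("shift_jis")

# Decoding with the wrong encoding garbles the non-English text.
wrong = raw.decode("utf-8", errors="replace")

# Decoding with the encoding the file was saved in recovers it.
right = raw.decode("shift_jis")

print(right.splitlines()[0])  # → 部門,金額
```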

Thanks for the advice. I still cannot get around it even though I tried what you mentioned; I hope the PQ team will make it work in a future update. Until then, I guess my best option is to stick to the old Binary.Combine() way to pull up the data.

BTW, the tips from your book were a really great help: I was able to shorten a full-day routine job to a few minutes' "coffee break". Thanks!

Ken

Just want you to know the broken text bug has been fixed by the latest update on PQ. Though I still find it confusing to comprehend the newly added "sample query groups".

Thanks Marshal. I agree on the query groups, it's a bit overwhelming. Maybe I'll do up a specific post on understanding how this flows from one to the other.

That would be great Ken. I have had some struggles recently with trying to make modifications after the function groups are built.

Hi Ken,

I've just run into a problem when I try to do another Combine Binaries on the same folder (after renaming queries in the first one), and the Evaluate Query step goes on forever and never finishes.

Is the problem caused by renamed queries in Sample Group?

Or is it that I can only do it once with the same folder?

Thank you

Lana

Hi Lana, probably best to post this one in the forums at http://www.excelguru.ca/forums. Ideally, if we can see your queries there (if you can upload a sample workbook), it would make it much easier to debug.