How we reduce development lag when building queries in Power Query is a question that came up in my blog post at PowerPivotPro last week, even though that wasn't the main issue. I thought it might be a good idea to throw out a development methodology that can help with this if you're struggling in this area.

Also, if you haven't read "Why Excel's Power Query Refresh Speeds Suck" on Rob's site, please do, as it really highlights a key issue that can affect Power Query refresh times, and we really need you to vote it up.

Why do we need to reduce development lag?

This isn't a problem that affects small data sets, but the bigger the data set is, the more you feel it. The issue comes up because - during query development - Power Query doesn't load all of the data into the local system. (If it did, you'd REALLY scream about crappy performance!) What it does instead is pull in a preview of the data.

The number of rows varies based on the number of columns and data types, but for now, let's pretend that the Power Query preview:

- pulls exactly 1000 rows

- your data set is 70 million rows

I do want to be clear here though… this issue isn't restricted to data sets of this size. If you're consolidating 30 CSV files with 50k rows each (a total of 1.5 million rows), you're certainly going to feel this pain. Why?

To illustrate this, let's go through a workflow:

- You connect to the data set

- Power Query pulls the first 1000 rows and load them to your preview

- You filter out all records for dept 105, removing half the rows in your data set

At this point, Power Query says "Hey, you eliminated half the rows. I'll go pull in some more for you, so that you can keep operating on a full preview window." It goes back to the data source, and essentially streams in more data, tossing the records you don't need, in order to fill up the preview of 1000 rows.

Now you drop 20 columns, allowing the data set to expand to 1200 rows in the preview. Naturally, it needs to go back to the data set, pull values again, run it through all the steps to date in order to land you 1200 rows.

Now to be fair, I'm not certain if something like a "Change Type" or "Replace Values" step causes a refresh, but it very well may. On large data sets it certainly feels like it does.

If you're not nodding your head at this point, I can pretty much assure you that the data sets you've been working with are tiny. The bigger those sets get, the more this hurts.

And before anyone says "why not just add a Table.Buffer() command in there?"… in my experience this doesn't help. From what I've seen, if you are in dev mode, this causes the buffering to get re-executed, which just adds time to the process.

A Strategy to Reduce Development Lag

So how do we reduce development lag and avoid the time wasted when developing our query chain? Here's a strategy that may work for you. It's a 5 step process and requires Excel, although you could certainly develop in Excel then copy your queries to Power BI Desktop afterwards. The reason? Power BI has no grid to land your data to…

Step 1: Connect to the Data Source

The first part is easy. You simply connect to your data source, whether that be a file, database, folder, or whatever. The key thing here is that your output needs to be "flat". (No nested lists, tables, binaries or values in any columns.) Each column that contains nested lists/tables or values needs to be expanded before you can move to step 2.

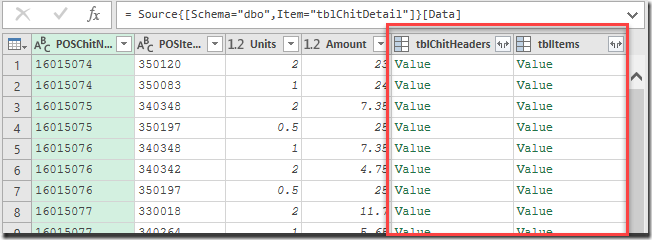

In the example below, you can see that I pulled data from a database which has related fields:

As the related "Value" is a complex data type, it needs to either be removed or expanded. In this case, I wanted some data from within the tblItems, so I performed the following steps:

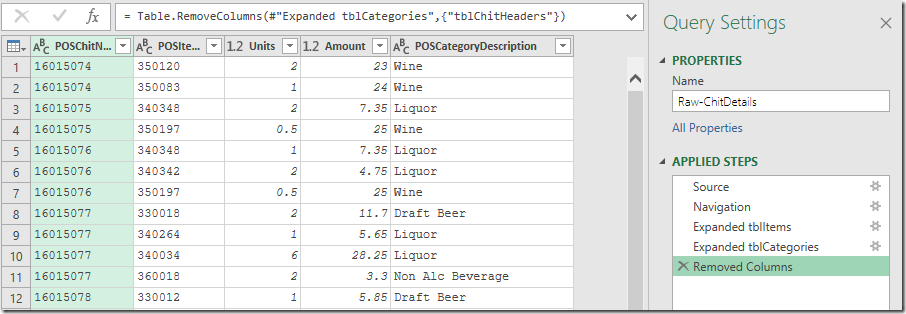

- Expanded the tblItems column to extract the tblCategories column

- Expanded the tblCategories column to extract the POSCategoryDescription field

- Removed the tblChitHeaders column

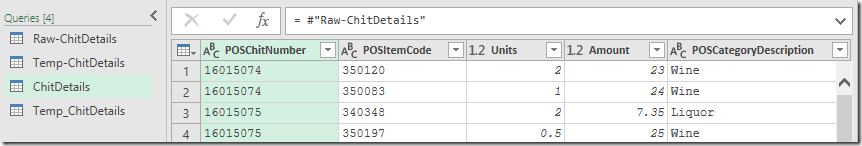

And the result looks like this:

And now, let's pretend that every step I add is causing the preview to refresh and taking ages to do so. (Ages is, of course, a relative term, but let's just assume that it has exceeded my tolerance for wait times.) So what can I do?

What I do now is:

- Stop

- Give the query a name like "Raw-<tablename>" (where <tablename> is your table. Mine is Raw-ChitDetails)

- Choose Close & Load To…

- Load to "Connection Only"

Once done, I can build myself a temporary stage to reduce development lag during the construction stage.

Step 2: Reference the Data Source query and land to an Excel Table

To create our temporary stage, we go to the Queries pane, right click the Raw-ChitDetails query, and choose Reference. (And if you've read the post on Why Excel's Power Query Refresh Speeds Suck, you're cringing. Go vote if you haven't already).

When launched into a new query, you're going to do two things and two things only:

- Rename the Query to something like "Temp-<tablename>" (again, where <tablename> is your table. I'm using Temp-ChitDetails.

- Go to Home --> Keep Rows --> Keep Top Rows --> 10,000

And now, go to Home --> Close & Load and choose to load to an Excel table. This is your temporary data stage.

The key here… never do more than just the Keep Top Rows part. You don't want to do any additional filtering or manipulations in this query. You'll see why a bit later.

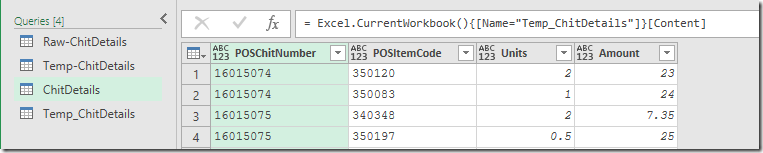

Step 3: Load the Excel Table to Power Query and develop your solution

Next, you'll need to click a cell in the new table, and choose to load from Table or Range. This will create you a new query that you can start to manipulate and do the cleanup you want.

The beauty here is that the table you've loaded from Excel needs to be manually refreshed, so we have essentially frozen our data preview. The preview will still get refreshed (we can't avoid that), but since the data has been loaded to a worksheet in Excel, Power Query will treat that table as the data source.

The reason we needed 10,000 rows is to have enough data to identify the data patterns we are working with. If that's not enough because you filter out larger quantities, then up the 10,000 to something larger or filter in the Raw query (and suffer the lag time there) before loading to the table.

So this is the query where you do all of your development work. With a smaller "frozen" data set, this should allow you to work while suffering much less refresh lag time.

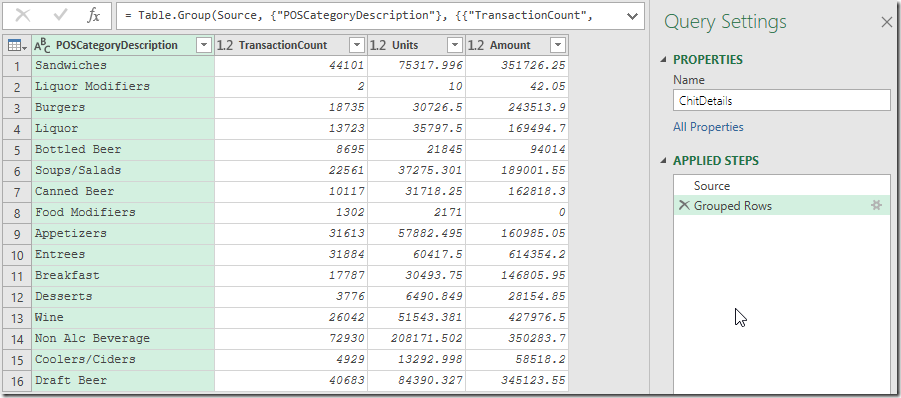

As a sample here, I ran a Group By on the smaller data set. Is that a good query to illustrate this? Probably not, I just wanted to show that I did something here.

So this query only has two steps, but hopefully you get the idea that this could be a few dozen (or more) steps long, each taking MUCH less time to update than working against the whole data set.

Quick note… I deleted the Changed Type step that is automatically added when pulling data from an Excel table. I did this as the Changed Type step often breaks Query Folding when you pull from a database.

With my development work done, I've named my query with the name I want to use for the final load destination. In this case it's "ChitDetails", and set it to Close & Load To… --> Connection Only and Data Model to load the table for Power Pivot.

Of course, this query is only working against my frozen 10,000 row data set, which isn't going to cut it long term, so I need to fix that.

Step 4: Re-point the working query against the Data Source query

With the query loaded, I'll go back and edit it. The key is that I need to select the Source Step. In the formula bar we'll see the code used to call from the Temp data table:

This formula needs to be updated to pull from the Raw-ChitDetails table, so I'm going to do exactly that:

Notice that the formula is actually:

=#"Raw-ChitDetails"

This is to deal with the fact that I used a hyphen in the name, so it needs to be escaped with the hash-quotes in order to read correctly. If you forget to do that and just type =Raw-ChitDetails, you'll be told you've got a cyclical expression.

At this point, you've done your development work more quickly, and have now pointed back to the original data source. (This is exactly why we only restrict the number of rows in Step 2. If we did more, we'd need to retrofit this query.)

When you click Close & Load now, it will load to the data source you chose earlier. (Power Pivot for me.)

Step 5: Delete the Temp Queries and Excel Table

Notice that we actually have two Temp queries now. The first was used to reference the Raw query and land the data in the Excel table. The second was pulling from the Excel table into Power Query. Both can now be deleted in additional to deleting the worksheet that held the temporary table as their jobs are done.

What if I need to do this again?

Easy enough. You can simply:

- Create a new query by referencing the Raw table. (Queries Pane --> Right Click --> Reference)

- Name the query Temp-<tablename> and Close & Load to an Excel table

- Select a cell in the table and create a new query From Table or Range

- Rename this new query as "TempStage" (or something) and choose to Close & Load To… --> Connection Only

- Edit your "final" query's Source step to =TempStage. (You only need the #"" if you include a character that Power Query uses for something else.

And at that point you're back in development mode.

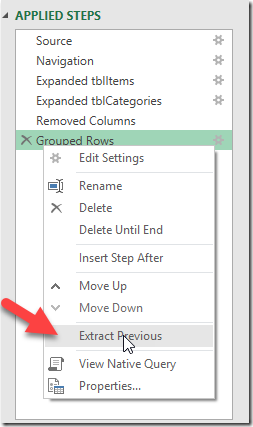

What if my Query is already several steps long? Do I have to start over?

Absolutely not! If you've been working away building a very long query, you can split them apart into the staging setup I used above. Open your query and walk through the steps to find where you wish you'd broken them apart. Right click that step and choose "Extract Previous"

You'll be prompted for a new query name, so enter "Raw-<tablename>". What this will do is extract all the previous steps into a new query for you, and set your current query's Source step to point to that query. Then you can follow the steps outlined in the previous section to get back into development mode.

What about Power BI Desktop?

The problem with Power BI desktop is that it has no grid to land the data to, which means we can't get that "frozen preview" setup. But we can adapt the solution to use Excel if you've got Power Query in Excel. You do this by:

- Creating the same "Raw Data" stage in Power BI Desktop

- Reference the Raw Data query to get into your "Final" query

Then create your data stage:

- In Excel, replicate the Raw Data staging query EXACTLY as it is in Power BI desktop

- Filter the query to 10,000 rows

- Close & Load to an Excel table

- Save & Close the file

Back in Power BI Desktop

- Create a new query to pull from the data in Excel

- Repoint your "Final" query to reference the Excel query

- Do your development

- Repoint to the original query when done

It's a bit clunky, but if you're really suffering performance, you may find it worth it.

Should Previews Always Refresh?

One thing I've thought about setting up a UserVoice item for is a setting in the Excel/Power BI UI that allows me to turn off preview refreshes until I click the button inside the PQ editor. My initial thought is that this would help, as it would essentially cache the preview, and we could operate on it without tripping a recalculation to slow things down.

Naturally, when we first create the query, it would need to create a preview. But after that, I would hope that it just worked with the data loaded in the preview until I hit the Refresh Preview button. That would be the key to re-execute all query steps to date - in full - to generate a revised preview.

Having said that, I have a feeling that this would be pretty complicated to implement. Some steps (like combine binaries and grouping) would require the preview to be updated or you'd get nothing. But others, like filtering rows out, really shouldn't. It could require a significant change to the underlying architecture as well.

Regardless, something to "freeze" the preview would certainly be welcome as the development experience with larger data sets can certainly be painful.

8 thoughts on “Reduce Development Lag”

Ken, I've been using temporary queries for a while, although for "tiny" data sets.

My question, would it be successful to just limit the incoming data set to 100,000 rows using query folding during the dev process, and then remove later?

Sorry - I meant to say 10,000 rows

Hey Alex,

I would think that should work if you have a database that can take advantage of query folding, yes. If you don't, then you're stuck doing it the long way.

Great article. Development is very painful. I have seen PQ hang for a bit while it refreshes *something* when I rename a step. I almost never leave the default step names as is because they are unhelpful later on. Why renaming causes a lag I'll never know, but it does!

What we need is something like Disconnect Table

Similar to a Linked Table in Access - Where the Data is then locally cached.

We can then do all the development with data stored in cache and once done its a matter of pointing the Source back to the live source.

Yes Sam, that would be fantastic!

Hi Ken, great read, But can you please start making videos for these tips in future? it will be far easy to understand when you see things visually !!

Hi Prashant,

I could, but appreciate that I'm providing all of this information for free. I may do this in future, but it will be in the style of a chargeable course like I host here: https://courses.excelguru.ca