One of my favourite tricks to use on a dashboard is to show the Last Refreshed Date. This is important as it lets you know how stale your data is. I think it’s even more important with Power BI, as it tells you if your automatic refresh is actually working!

Last Refreshed Date for Power Pivot

I’ve actually covered this topic in the past for Power Pivot solutions. You can find that article here if you’re interested.

Display the Last Refreshed Date in Power BI

Generate Last Refreshed Date with Power Query

Unlike the previous article, to work with Power BI, we need to generate the Last Refresh date ourselves using Power Query. No big deal, it is as simple as this:

- Open PowerBI Desktop

- Get Data –-> Blank Query

- Go to Home –> Advanced Editor and replace the code in the window with this:

let

Source = #table(type table[LastRefresh=datetime], {{DateTime.LocalNow()}})

in

Source

- Click Done and rename the query “LastRefresh_Local”

- Click Close & Apply

This code makes a nice little table that returns the current date and time each time we refresh the solution.

Create the Last Refreshed Measure

To finish it off, we need to create a simple measure and add it to our dashboard.

- Go to your Report window –> New Measure –> Define it as follows:

Last Refreshed (Local) = FORMAT(LASTDATE(LastRefresh_Local[LastRefresh]),"mmm dd, yyyy hh:mm:ss AM/PM")

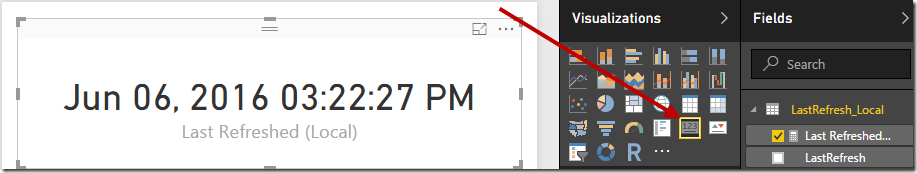

- Now let’s create a Card visual to hold it:

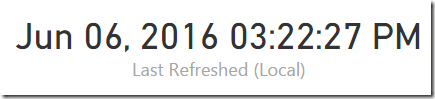

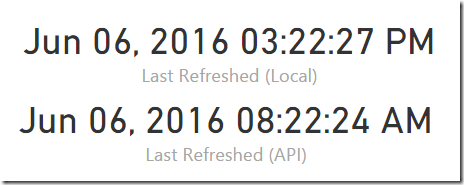

So far everything looks really good here. The card is formatted nicely, and I updates to the current date and time every time I hit the refresh button. It’s a thing of beauty! What could possibly go wrong with this?

Publishing to the Power BI Service

Encouraged with our visual, we’ll now publish it to Power BI:

- File –> Publish –> Publish to Power BI

- Login to the Power BI service and scroll down to the Datasets area

At this point you should click the little … to the right of the dataset you uploaded (whatever you called it) and click Refresh. It will prepare for a bit, then should refresh. If your experience is anything like mine, your report shows this:

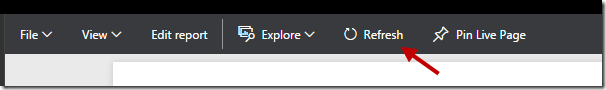

At first, you think “Hey cool!” until you realize that this is the data – to the second – of your original upload. So even after you refresh the data set, you need to refresh the report:

And when you do, you get this:

Um… WHAT? That’s 7 hours different! How is this possible?

So I’m in the Pacific time zone, which is currently UTC-7. (We are UTC-8 when we are not on daylight savings time.) So apparently the date that is showing here is giving me the UTC date.

This really sucks, obviously. I was really hoping that the dates and times would show based on my browser settings or something, but obviously not.

I tried a bunch of things to fix this, including forcing the column to a DateTimeZone data type, but that made the DAX formula choke, as it only accepts DateTime data types. I tried various combinations using DateTimeZone.UtcNow(), DateTimeZone.LocalNow(), adding periods, subtracting them and more. The killer though was that as soon as the time zone got removed (which is necessary for the DAX measure to work), Power BI displayed the time assuming it was UTC. So the issue I’m seeing here is that – no matter the DateTime you feed Power BI – it thinks it has the same time zone as the server. Not so good.

How to Display the (Correct) Last Refreshed Date with Power BI

Just to be clear here, the title above is actually a heinous misrepresentation. The timestamp was correct, it’s just being interpreted incorrectly by Power BI (but not Power BI desktop). So I had to fake it out.

I reached out to Chris Webb for his thoughts, and he suggested hitting an API. I’d already tried looking for a page, but picked up on this API which turned out to be quite useful: http://www.timeapi.org.* Even more interesting is that both www.timeapi.org/pst and www.timeapi.org/pdt return the same thing. I’m hoping that this means it is going to allow me to survive daylight savings time with no adverse impacts…

*Update: Please note that www.timeapi.org is no longer available. While the particular example demonstrated below will no longer work, the concept itself is still valid.

So armed with that, I decided that the only way I was going to get this to work was to pull back the UTC offset, and add it to my date. That effectively returns a bogus date that Power BI will represent in UTC to match my time zone. Complicated, no?

Collecting the Current PST Date/Time

I don’t think I’m going to clearly document all the steps for this, as that would make this article really long. Instead, here’s how you can implement this:

- Create a new Blank query and paste this code in the Advanced Editor

let

Source = Web.Page(Web.Contents("http://www.timeapi.org/pst")),

Data0 = Source{0}[Data],

Children = Data0{0}[Children],

Children1 = Children{1}[Children],

#"Removed Other Columns" = Table.SelectColumns(Children1,{"Text"}),

#"Inserted Last Characters" = Table.AddColumn(#"Removed Other Columns", "Last Characters", each Text.End(Text.From([Text], "en-US"), 6), type text),

#"Changed Type2" = Table.TransformColumnTypes(#"Inserted Last Characters",{{"Text", type datetimezone}}),

#"Replaced Value" = Table.ReplaceValue(#"Changed Type2",":00",".0",Replacer.ReplaceText,{"Last Characters"}),

#"Replaced Value1" = Table.ReplaceValue(#"Replaced Value",":30",".5",Replacer.ReplaceText,{"Last Characters"}),

#"Changed Type1" = Table.TransformColumnTypes(#"Replaced Value1",{{"Last Characters", type number}, {"Text", type datetime}}),

#"Added Custom" = Table.AddColumn(#"Changed Type1", "LastRefresh", each [Text]+#duration(0,Number.From([Last Characters]),0,0)),

#"Removed Other Columns1" = Table.SelectColumns(#"Added Custom",{"LastRefresh"}),

#"Changed Type" = Table.TransformColumnTypes(#"Removed Other Columns1",{{"LastRefresh", type datetime}})

in

#"Changed Type"

- Click Done and rename the query as “LastRefresh_API”

- Click Close & Apply

The basic gist is that it does the following:

- Pulls the web page and drills into the single cell we need

- Splits off the time zone information, replacing the minutes with fractional hours and turns it into a decimal number

- Extracts the original date/time (without the time zone) and adds the time zone offset to that value. This essentially subtracts 7 hours from the original date for me right now

- Performs as bit of cleanup to drill into the faked date/time we need

(If you want to use this for your time zone, I believe all you should need to do is change the “pst” portion of the URL to your time zone name.)

Create a new Last Refreshed Measure

Creating this measure is pretty much the same as the last one.

- Go to your Report window –> New Measure –> Define it as follows:

Last Refreshed (API) = FORMAT(LASTDATE(LastRefresh_API[LastRefresh]),"mmm dd, yyyy hh:mm:ss AM/PM")

- And create a Card visual to hold it

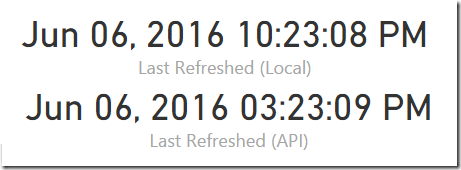

You should now have this odd looking contrast:

Great, except that… it is 3:22 PM for me, not 8:22 AM. But fine, whatever, let’s publish this and see what happens.

Publishing to the Power BI Service

To publish the file, we take the following steps again:

- File –> Publish –> Publish to Power BI

- Confirm that we want to overwrite the data set

- Login to the Power BI service and scroll down to the Datasets area

- Click Refresh Now

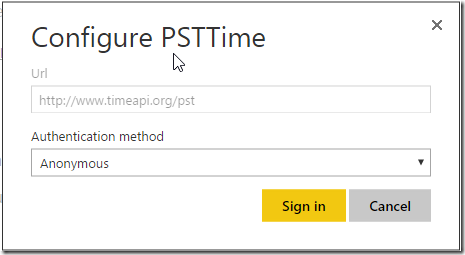

At this point you might be told you can’t as you haven’t provided credentials to the data set. Just Sign In as Anonymous and you’ll be fine:

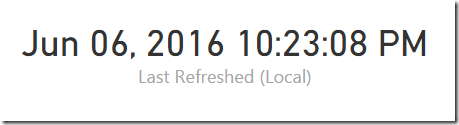

And of course, now we can refresh the Report as well. And when we do…

As you can see, the API version is showing the correct time here, not the Local version.

Takeaways

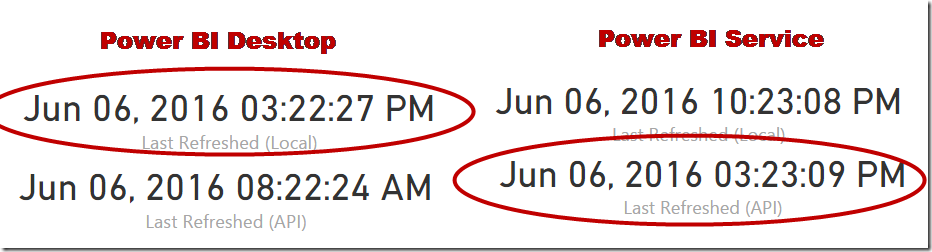

The biggest takeaway here is that you have to care about how your audience is using your files. If you are going to deploy your reports by emailing *.pbix files around the company, the setting up a table using the DateTime.LocalNow() will work great. But if you are going to publish your files to the web… that obviously throws in some wrenches. Here’s a side by side view of those two alternatives:

Probably the most frustrating thing to me is that I was trying to find a solution that will show it correctly in BOTH Power BI Desktop and Power BI Online. There’s nothing worse than working with a report you know is broken depending on where you look at it. 🙁

PBIX File

If you want the file so that you can try it out in your own PowerBI service… here you go. 🙂

50 thoughts on “Display Last Refreshed Date in Power BI”

This is excellent and I can definitely see myself using this once I figure out the visualisation tools!

Great post Ken.

This not only solves the issue you've mentioned. but also solves DAX time zone issue as I mentioned here:

http://www.radacad.com/solving-dax-time-zone-issue-in-power-bi

I'll be honest that I'm still not in love with it though. And I really wish I could read from a browser to pick up the reader's current time zone. That would allow us to display it the dashboard in their time zone.

Hi there Ken, a great post.

It does appear in my instance if I put in the Blank Query make the required changes and upload it to the Power BI Service, it initially works well.

When I try and refresh it using the Power BI Enterprise Gateway it fails for some reason. It says that my Enterprise Gateway is not on-line. But I can confirm that it is on-line for other reports.

Any idea's?

Once I removed the blank query it can see the Enterprise Gateway again.

Thanks

Interesting... I honestly haven't got any idea on this, as I don't have an Enterprise Gateway installed. I just used the standard setup from Power BI without trying to set up a gateway specifically...

Hey Gilbert, it looks like I may have an answer for you here. This is direct from Microsoft: "Combining online and on-prem data source is not supported with the Enterprise Gateway". So my guess is that this is what's happening here, as the URL to the PST time is outside your org. 🙁

No ETA on a fix for this, but you can help vote it up here: https://ideas.powerbi.com/forums/265200-power-bi-ideas/suggestions/13405644-combine-enterprise-gateway-and-online-data-sources

I'm suing a CSV file as a datasource, I would like to pull the Modify date from the file so I can know how old the data is. Can i use this syntax to pull the csv file's modify date?

Not exactly, no. You'd instead go and browse to the folder with the file, and pick up the last modified data from there.

Howdo Ken. here's an interesting question:

I have 6 data files in OneDrive. I'm going to rely on the user to upload those files to that location on a regular basis

My data model will refresh from these files on an hourly basis. As well as showing the hourly and on-demand refresh times I'd actually like to grab the last modified time of the source file. I can then establish that the user has uploaded and overwritten all files necessary and none of them are 'stale'

I suppose I need something like a GetPropertyOfFile(LastModifed) type function thing. Do you know if this is possible?

Hey Anthony,

Since you're pulling data from OneDrive, I assume that you're starting by connecting to the data folder, rather than the individual files within it. That folder view should give you the last updated timestamp of the file. So basically, if it's a text file, you'll want to use the Csv.Document function to extract the data, rather than clicking the double down arrow. That way you preserve the file timestamps. If it's Excel files that you are consolidating, you're already using the Excel.Workbook function to extract the contents, so just make sure you didn't already remove the timestamp column(s) you want.

Yes, precisely that, connecting to a SharePoint folder and files are of type csv or xlsx

Ah, with you, yes can see a timestamp column by stepping through but i'm losing it by the time I get to step 3 below.

ODG & ODG_SD are just parameters denoting path

I'm sure I'm not doing this the best way (it was just what I got working at the time by trial and error). Your advice would be appreciated

let

OneDriveGroup = SharePoint.Files(ODG, [ApiVersion = 15]),

SalesMonth = OneDriveGroup{[Name=SALES_MR,#"Folder Path"=ODG_SD]}[Content],

ImportedCSV = Csv.Document(SalesMonth,[Delimiter=",", Columns=86, Encoding=1252, QuoteStyle=QuoteStyle.None]),

UppercasedText = Table.TransformColumns(ImportedCSV,{},Text.Upper),

Promote = Table.PromoteHeaders(UppercasedText),

Half way through responding and I closed my browser window (sigh).

At any rate, your issue appears to be that you are drilling in to a specific file. You have the Data Monkey book, so check out page 37 on "Preserving File Properties on Import". The big difference is that you need to add a column which converts the binaries from binaries into tables.

Steps should be:

-Connect to folder

-Filter to just the file you want (but don't drill in)

-Add a custom column with formula: =Csv.Document([Content])

-Remove all columns except the custom column and the column with the file properties

-Expand the custom column

Hopefully that helps.

It looks like timeapi.org is down or for some reason won't accept my connections from work or from home. Would be ideal to have a solution not dependent upon a third-party site. Could not the time be recalculated in the script?

Yeah, it sure would. The problem is that because the desktop and server run on different time zones, we either have to hard code the update time and change it when daylight savings time changes, or post the time in UTC and just get used to it. I wish I had a better way.

Even worse is that Power BI now won't allow scheduling the refresh of a Web.Contents call because... security. 🙁

Hi Ken - thanks for the great post. I wanted to share a work-around I used to get over the time zone offset.

In the Query created, I added a custom column:

=Text.From([LastRefresh])

(be sure to change the data type to 'Text' else PowerBI.com will interpret as a datetime)

Here's the updated query:

let

Source = #table(type table[LastRefresh=datetime], {{DateTime.LocalNow()}}),

#"Added Custom" = Table.AddColumn(Source, "Last Refresh (PST)", each Text.From([LastRefresh])),

#"Changed Type" = Table.TransformColumnTypes(#"Added Custom",{{"Last Refresh (PST)", type text}})

in

#"Changed Type"

Then I just added this data field to the Card, and Voila! PBI desktop and PBI site data matches.

Hmm. Caveat on my previous post - when I publish directly from PBI desktop - it works. However when doing the refresh from the PBI service / Gateway, it does not. Sorry for misleading post - I did not test thoroughly before sharing.

Yeah, that's the issue. The local desktop and server are in two different timezones, and the service re-executes the query in it's timezone. 🙁

This is a really useful post. I'm wondering if you've found a new timezone api since timeapi.org appears to be dead? Thanks.

Sorry Riley, I haven't. Part of the reason, to be honest, is that you can't schedule a refresh from a web page in Power BI any more. So I didn't bother hunting.

Pingback: IT Budget Blog Series: Integration of Currency Exchange Rates | Everything about Nothing in a world about Everything

This works for me.

let

Source = Web.Page(Web.Contents("http://localtimes.info/North_America/United_States/Ohio/Dayton/")),

Data1 = Source{1}[Data],

#"Changed Type" = Table.TransformColumnTypes(Data1,{{"Column1", type text}, {"Column2", type text}}),

date = #"Changed Type"{1}[Column2],

time=#"Changed Type"{0}[Column2],

datetime=DateTime.FromText(date&" "&time)

in

datetime

Thanks Evan!

Not sure if this is still relevant, but the solution from here works for me quite well: http://community.powerbi.com/t5/Service/last-time-refresh/m-p/7208#M3415

Hi All

I'd be grateful for any assistance with how to display the last modified date of csv files used as data sources in PowerBI? The Last Refreshed date/time is helpful, although in our situation its more critical we can confirm from within PowerBI that the actual datasource csv files are up-to-date (the CSV files are weekly exports of storage data and PowerBI uses these files datasources).

Hi Anthony,

If you get data from folder (which I assume you are), then the last modified date is one of the built-in properties. If you're losing that column upon combining binaries, just go back to the step RemovedOtherColumns1, click the gear, and you should be able to preserve that column.

Hi Ken

Thanks very much for the reply. The PowerBI gets the csv files from our O365 sharepoint site. We are pulling in three separate CSV files. In each case, we use the "Get Data > Web" option for the datasource. Each URL is the full path to each individual csv.

Given the CSV files are generated at slightly different times, would be good to report on the individual last modified date of each CSV file in PowerBI. Just wondering if you know if this is possible and how I might go about it.

Hi Anthony,

Okay, so first problem is that you are picking up data "From Web". You should be picking up data using the Sharepoint connectors instead. Then you'd be able to see the entire list of files, sort and filter by dates and pick up the latest. Have a read of this post, as it may help with the Sharepoint options: https://excelguru.ca/2016/09/07/get-data-from-onedrive-for-business/

Hi Ken,

very useful post.

I think the reason of not taking the local datetime but the UTC time is not due to a bug but the direct consequence of where the code you wrote is running.

I mean: the local time for PowerBI desktop is your local time, but the local time of the server where the report has been refreshed online is (maybe) the UTC.

Because your DAX is evaluated during the dataset refresh once for all and not at viewing time once per each viewer.

I think the only "global" solution can be to calculate the UtcNow at refresh time (in your DAX) and show the result (UTC) DateTime in a custom-visual able to show at view-time the local DateTime (based on the client-ip?) from the UTC DateTime of the behind data field.

My 2 cents

Pingback: Power BI: Include ‘last refresh’ date and time in a report – Detailed Files

Hi Ken,

Thank you for the post and it was so useful.

I found a workaround to calculate the time based on UTC and timezones.

let

Source = #table(type table[LastRefresh=datetime], {{DateTimeZone.SwitchZone(DateTimeZone.UtcNow(),10,0)}}),

#"Split Column by Delimiter" = Table.SplitColumn(Table.TransformColumnTypes(Source, {{"LastRefresh", type text}}, "en-AU"), "LastRefresh", Splitter.SplitTextByEachDelimiter({"+"}, QuoteStyle.Csv, true), {"LastRefresh", "LastRefresh.2"}),

#"Changed Type" = Table.TransformColumnTypes(#"Split Column by Delimiter",{{"LastRefresh", type datetime}, {"LastRefresh.2", type time}}),

#"Removed Columns" = Table.RemoveColumns(#"Changed Type",{"LastRefresh.2"})

in

#"Removed Columns"

-----------------------------------------------------------------------------------------------------------

DateTimeZone.UtcNow() gets the current UTC and i have used DateTimeZone.SwitchZone to calculate the time relative to UTC.

Thanks for the info

Thanks so much for sharing

Here is another formula that has worked to change the time zone. Create a new measure:

Refresh Time (PDT) = FORMAT(LastRefresh_Local[Last Refreshed (Local)] - TIME(7,0,0), "mmm dd, yyyy hh:mm:ss AM/PM")

@Brandon, this works, the only challenge is that when daylight savings time changes, you need to update the formula to reflect PST instead of PDT. (Working with times is not easy!)

Hi Ken, I'm trying to create a new measure as "now()" to show the last refresh date, do you know what's the difference between "now()" and this M language you post?

The equivalent of Excel's NOW() function is DateTime.LocalNow(). Just be aware that when you publish to a server, that it will return the UTC time, not your local time, as your server is most likely in a different time zone. There is also a DateTime.UtcNow() function that could be useful for you if you need to start converting from one time to another.

Hello- has there been any update on the easiest way to time stamp reports?

Hi Lisa,

We can now pin a tile to a dashboard, and then simply edit the tile properties to show the time last refreshed. That's by far the simplest.

Thanks so much for sharing 🙂

Still working on this time zone issue. Would highly appreciate it if someone could help me understand why the PowerBI service and desktop show two different timings. Is there a DAX function where the base datetime can be stored as UTC and then adjustment according to timezone can be made to it?

Also i was trying UTCNOW() - 6/24 for Central time , but same issue happens when we go to power bi service.

Does anyone know why? Maybe the power bi source has a different "source" or database from which it pulls date time information. If so how do we integrate both of them?

Hi Vin,

There issue you run into with LocalNow() is that the server is in one time zone, and you are in another. Depending on what data centre you hit, that makes it very volatile. There is a DateTime.UtcNow() function as well, which should return the correct value worldwide, but then trying to determine the offset can be kind of painful as daylight savings time can shift the time zone offset differently during the year.

Thanks for sharing it, really interesting.

i've found new way, actually i'm using mysql on premise server, and connected to PowerBI using personal gateway mode.

i create view table on database as source which is execute get datetime value on my sql syntaks.

i put this value on PowerBI Dashboard with card,

so when auto schedule refresh done (dataset), it automatically update Last Refresh Date. it can solved time zone issue.

Thank you

You also use the UTCNOW function and apply the time differential.

In our case all times are EST (UTC -5)

UTCNOW() - 5 / 24 will give you current time in EST and it will be consistent between Desktop and Online

This is an excellent example. However, the site http://www.timeapi.org doesn't seem to work. So I found a workaround:

Press Get data > JSON > paste in this address: http://worldtimeapi.org/api/ip >

in the Power Query Editor, from the Convert ribbon, press Into Table > Select the Transform ribbon and press Transpose > Use First Row as Headers.

et, voila!

in the column [datetime] you should see the date and time of your refresh based on your location, which is determined via your ip address.

You can use this on the local report, however there is a caveat when using it with a published report. It didn't work for me, because my Gateway was not configured properly. It actually temporarily broke my connection to the Gateway, because it is configured to connect me to the server, and not to external sources. So keep that in mind.

@Rokas Klydžia thx!

Get Data --> Web

this worked for me, for some reason JSON was not picking up the site URL.

Thank you for the tip!

Hi

Thanks for this tip

Can I use the 'Last Run' timestamp fra this report, in another report....?

And how

Thanks

Hi Mads, once the query is loaded to the data model you should be able to use it on any report built against that data model (in that Power BI file.)

Ken,

Have you thought of parameterizing the values of the timezones and offset the UTC values? i wrote something about it here and it sounds relevant to the article:

https://gorilla.bi/power-query/last-refresh-datetime/

Cool suggestion Rick. Took me a minute to follow the logic, but yes, I like it!