This week’s post was inspired by a question in my Power Query help forum. The poster has a set up data, and needs to keep the most recent entry for each person from a list of data.

Background

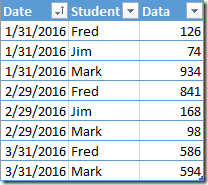

I never saw the user’s real data, but instead mocked up this sample, which you can download here:

Obviously it’s fairly simple, and the key thing to recognize here is what we’re after. What the user needed was this:

As you can see, we want to keep the most recent entry only for each person. In the case of Fred and Mark that is Mar 31, but Jim didn’t have any activity for March, with his last entry being Feb 29. So how do we do it?

1st attempt to keep the most recent entry

I figured this was pretty easy, so advised the poster to do the following:

- Pull the data into Power Query

- Sort the Date column in Descending order

- Use the Remove Duplicates command on the Student column

- Give the query a name (I called mine “Unbuffered” for reasons that will become clear)

- Load it to the worksheet

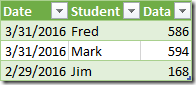

Easy enough, right? Except that we actually got this:

Huh? What the heck is going on? I tried changing the dates to text in an attempt to steal away Power Query’s ability to sort based on dates. (Okay, it was a shot in the dark, and it didn’t work.)

As it turns out, the “Table.Distinct” command that is used to remove duplicates IGNORES previous sorts, going back to the original data sort order. I’ll admit that this completely shocks me, and is not at all what I’d expect.

So how do you keep the most recent entry?

There’s a few potential ways to deal with this:

- Sort the data before it comes into your query. This could potentially be done in a staging query, via a SQL sort command or some other method. The challenge is that this isn’t always practical (using that custom SQL query breaks query folding, right?)

- Issue some kind of command (like a group by) that creates a new table which is already sorted in the correct order. Again, this would work, but really seems unnecessary unless you have some other need to do so.

- Sort the table, then buffer it before removing duplicates.

Huh? “Buffer” it?

Using Table.Buffer() to help keep the most recent entry

I’m not a master of explaining Table.Buffer() (yet), but basically you can look at it like this: It pulls a copy of the table into memory, preventing Power Query from recalculating it. This can be super useful if you’re passing tables to functions, but in this case can help us lock down the previous query steps before applying the duplicate removal. When the query state is buffered, that is the “most recent” copy that Power Query will revert to.

Rather than adjust the previous query, here’s what I did in order to create the working solution:

- Duplicated the “Unbuffered” query

- Renamed the new query, calling it “Buffered”

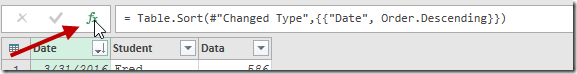

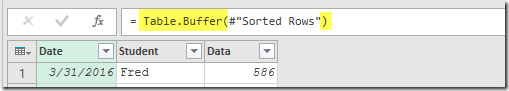

- Selected the “Sorted Rows” step we generated (just before the “Removed Duplicates” step

- Clicked the fx icon in the formula bar

As I’ve mentioned a few times on this blog, this creates a new step that simply refers to the previous query step. I then just wrapped the text for the new Custom1 step in the formula bar with Table.Buffer():

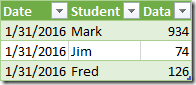

And when you hit Close & Load, you get a different result that our previous query… you get the result we actually wanted:

So what’s happening here?

First, just to be clear (before Bill or Imke call me out on this), inserting the new step wasn’t entirely necessary. I only did this to demonstrate the key difference in a distinct step of it’s own. I could have easily just wrapped the Sorted Rows step in Table.Buffer() and it would have worked fine. 🙂

The key difference here is that the Table.Distinct() command we use the Removed Duplicates step will go now only go back so far as the buffered table. From the Excel user’s perspective, it’s kind of like we’ve been able to copy all the steps before this, and lock them in with a PasteSpecial command, and point Power Query to that version of the data instead of looking back at the original.

Cool! I’m going to use Table.Buffer everywhere!

Um… don’t. That’s actually a really bad idea.

Table.Buffer() needs to be used with a bit more care than that. Every time you buffer a table, it needs to be processed and written into memory. That takes resources. You only want to use this command when it makes sense. Some places where it does:

- When you need to lock down previous steps to prevent things being ignored, like in the case above

- When you want to pass a table to a function. If you don’t buffer it first, the table may get re-calculated/refreshed before being passed into the function. If you’re doing this for every row, that can be a lot of re-calculations. In this case it may make sense to Buffer the table, then send the buffered table into the function. Even though the buffering takes overhead, it only happens once, which can speed things up

Just also remember that the instant you buffer your table, you break any query folding ability, as the data is now in Excel’s memory space. Something that is worth consideration if you’re doing large operations against a database.

22 thoughts on “Keep The Most Recent Entry”

I ran into this issue and emailed Microsoft and had some issues explaining it to various people. I think I emailed a few PP/PQ experts and they weren't familiar with the issue.

In my case I'm appending numerous files together with millions of records and while I thought of buffering to fix this, it was unbearably slow. I let the query run for 30 minutes (it normally takes 20s) and canceled it. I just import all the records now and add a flag to remove repeats.

Good to see other people have run into the same problem, and it isn't just me.

Great, you have just replied to my question in the running total topic.

I also noticed that when merging a query with another one, you lose the initial sort. Will the table.buffer preserve the sort in merging ?

And what about list.buffer that imke/ bill used in some of their previous comments ?

Hi Ken 🙂

For me, this is a bug. Why? Try to add some dummy operation between "Sorted Rows" and "Removed Duplicates" (for example change the type of "Student" column to text or add indeks column or something else). Then you can remove duplicates without an issue, and the result of this operation will be correct.

This is what i meant when I said that this is a bug for me.

Regards 🙂

Ken - insert index also works -- thanks Drew.

I was playing around with your first method and just tooling around I tried using a index column and that seemed to work. Not sure why this works when sorting doesn't.

1. Pull the data into Power Query

2. Sort the Student column in Ascending order

3. Sort the Date column in Descending order

4. Add new Index column

5. Move Index column to first column

6. Use the Remove Duplicates command on the Student column

7. Remove Index column

8. Name Query as desired

9. Load it to the worksheet

Phil, yes buffering millions of records would cause issues, as you're loading them all into Excel's memory space, removing the opportunity to use any query folding against the database. And if you used Table.Buffer inside an "each" statement (i.e. for each table in a row), then this would cause even more buffering calls. Definitely don't want to do that.

Anthony, I'm not sure on that sort question. Will need to play with it. Re List.Buffer(), it works the same as Table.Buffer, just for lists, not tables.

I don't disagree with the bug comment, Bill, but I did find out that it has always worked this way. The question now is "can they fix it"? The team has to be worried if someone has actually relied on the behaviour of this bug, and accidentally turned it into a feature. 🙁

Re the changing data type to text... that didn't change anything for me. I didn't try the index column part though. Will need to have a go at that one.

Drew & Walt, thanks for commenting here. Will check that out.

I have changed your "Unbuffered" query to this below and for me works fine.

let

Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Date", type date}, {"Student", type text}, {"Data", Int64.Type}}),

#"Sorted Rows" = Table.Sort(#"Changed Type",{{"Date", Order.Descending}}),

#"Changed Type2" = Table.TransformColumnTypes(#"Sorted Rows",{{"Student", type text}}),

#"Removed Duplicates" = Table.Distinct(#"Changed Type2", {"Student"})

in

#"Removed Duplicates"

I do not know why we get different result 🙁

Win 8.1, excel 2010 (x86), PQ ver. 2.32.4307.502

Regards

Building on Phil's earlier note above -- the PQ team were notified of the bug several months ago. It surfaced as part of our normal QC audit. "Insert Index" also scales well.

I just arrived to this post. At the office we use this technique: https://blog.crossjoin.co.uk/2015/05/11/nested-calculations-in-power-query/

Sort ascending or descending and then leave the number 1 if you want the first or the last record for each partition

Hi Ken,

what do you think about this code :

let

Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

#"Grouped Rows" = Table.Group(Source, {"Student"}, {{"Date", each List.Max([Date]), type datetime}, {"Details", each _, type table}}),

#"Expanded Details" = Table.ExpandTableColumn(#"Grouped Rows", "Details", {"Data", "Date"}, {"Details.Data", "Details.Date" }),

#"Added Custom" = Table.AddColumn(#"Expanded Details", "Custom", each [Details.Date]=[Date]),

#"Filtered Rows" = Table.SelectRows(#"Added Custom", each ([Custom] = true)),

#"Removed Columns" = Table.RemoveColumns(#"Filtered Rows",{"Details.Date", "Custom"})

in

#"Removed Columns"

Clever!

Actually just had to use this today, worked like a charm. Thanks Ken!

If I change type of Student to text prior to removing dupes, your first idea works fine.

let

Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

#"Sorted Rows" = Table.Sort(Source,{{"Date", Order.Descending}}),

#"Changed Type" = Table.TransformColumnTypes(#"Sorted Rows",{{"Student", type text}}),

#"Removed Duplicates" = Table.Distinct(#"Changed Type", {"Student"})

in

#"Removed Duplicates"

This seems a bit hokey, like relying on stuff to be just so so you can remove duplicates.

My first thought was to just group the data by person and still everything else into an AllRows table, then use Table.Max to pull out the maximum date from each table for each person.

=Table.Max([AllRows], "Date")

Then expand the record for Date and Data columns.

It requires more steps because you have some column rearranging and cleanup to do, but the steps are very logical.

I'll have to look at your other use cases for Table.Buffer. The documentation from MS is sparse on this to be sure.

"The team has to be worried if someone has actually relied on the behaviour of this bug, and accidentally turned it into a feature."

Ah well, that's why APIs have versions.

Maybe, Fred, but that's been the old standby. "Sorry, we can't fix it, because now people rely on this behaviour". Its unfortunate, but it's true. 🙁

Pingback: Nesting Multiple Sort Columns in Power BI | ezpbi.com | Power BI Portal

Just popping by to say that Leonid's solution above worked like a charm for me - I found that Table.Buffer() was just too inconsistent for any even remotely large datasets, and over relying on the brute force "remove duplicates" does just feel like a bit too much of a throw of the dice.

THANK YOU FOR THIS