Several years ago, when Power Pivot first hit the scene, I was talking to a buddy of mine about it. The conversation went something like this:

My Friend: “Ken, I’d love to use PowerPivot, but you don’t understand the database culture in my country. Our DB admins won’t let us connect directly to the databases, they will only email us text files on a daily, weekly or monthly basis.”

Me: “So what? Take the text file, suck it into PowerPivot and build your report. When they send you a new file, suck it into PowerPivot too, and you’re essentially building your own business intelligence system. You don’t need access to the database, just the data you get on a daily/weekly basis!”

My Friend: “Holy #*$&! I need to learn PowerPivot!”

Now, factually everything I said was true. Practically though, it was a bit more complicated than that. See, PowerPivot is great at importing individual files into tables and relating them. The issue here is that we really needed the data appended to an existing file, rather than related to an existing file. It could certainly be done, but it was a bit of a pain.

But now that we have Power Query the game changes in a big way for us; Power Query can actually import and append every file in a folder, and import it directly into the data model as one big contiguous table. Let’s take a look in a practical example…

Example Background

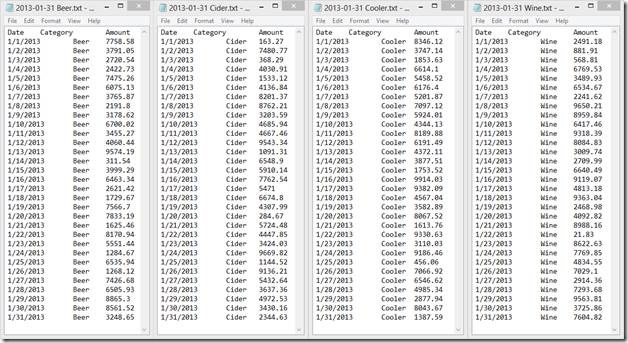

Assume we’ve got a corporate IT policy that blocks us from having direct access to the database, but our IT department sends us monthly listings of our sales categories. In the following case we’ve got 4 separate files which have common headers, but the content is different:

With PowerPivot it’s easy to link these as four individual tables in to the model, but it would be better to have a single table that has all the records in it, as that would be a better Pivot source setup. So how do we do it?

Building the Query

To begin, we need to place all of our files in a single folder. This folder will be the home for all current and future text files the IT department will ever send us.

Next we:

- Go to Power Query --> From File --> From Folder

- Browse to select our folder

- Click OK

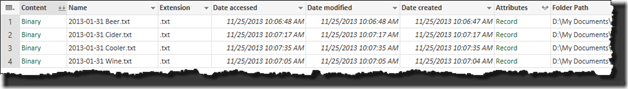

At this point we’ll be taken in to Power Query, and will be presented with a view similar to this:

Essentially it’s a list of all the files, and where they came from. The key piece here though, is the tiny little icon next to the “Content” header: the icon that looks like a double down arrow. Click it and something amazing will happen:

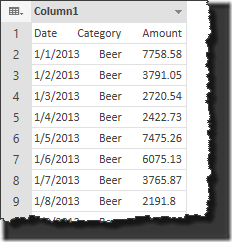

What’s so amazing is not that it pulled in the content. It’s that it has actually pulled in 128 rows of data which represents the entire content of all four files in this example. Now, to be fair, my data set here is small. I’ve also used this to pull in over 61,000 records from 30 csv files into the data model and it works just as well, although it only pulls the first 750 lines into Power Query’s preview for me to shape the actual query itself.

Obviously not all is right with the world though, as all the data came in as a single column. These are all Tab delimited files, (something that can be easily guessed by opening the source file in Notepad,) so this should be pretty easy to fix:

- Go to Split Column --> Delimited --> Tab --> OK

- Click Use First Row as Headers

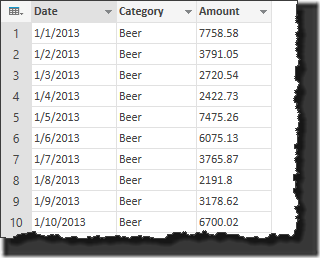

We should now have this:

Much better, but we should still do a bit more cleanup:

- Highlight the Date column and change the Data Type to Date

- Highlight the Amount column and change the Data Type to Number

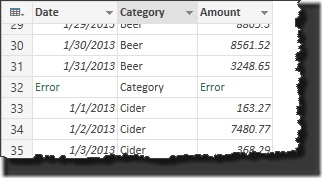

At this point it’s a good idea to scroll down the table to have a look at all the data. And it’s a good thing we did too, as there are some issues in this file:

Uh oh… errors. What gives there?

The issue is the headers in each text file. Because we changed the first column’s data type to Date, Power Query can’t render the text of “Date” in that column, which results in the error. The same is true of “Amount” which can’t be rendered as a number either since it is also text.

The manifestation as errors, while problematic at first blush, is actually a good thing. Why? Because now we have a method to filter them out:

- Select the Date column

- Click “Remove Errors”

We’re almost done here. The last things to do are:

- Change the name (in the Query Settings task pane at right) from “Query 1” to “Transactions”

- Uncheck “Load to worksheet” (in the bottom right corner)

- Check “Load to data model” (in the bottom right corner)

- Click “Apply & Close” in the top right

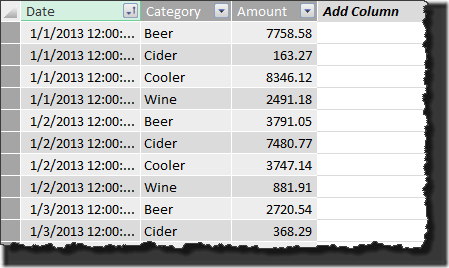

At this point, the data will all be streamed directly into a table in the Data Model! In fact, if we open PowerPivot and sort the dates from Oldest to Newest, we can see that we have indeed aggregated the records from the four individual files into one master table:

Implications

Because Power Query is pointed to the folder, it will stream in all files in the folder. This means that every month, when IT sends you new or updated files, you just need to save them into that folder. At that point refreshing the query will pull in all of the original files, as well as any you’ve added. So if IT sends me four files for February, then the February records get appended to the January ones that are already in my PowerPivot solution. If they send me a new file for a new category, (providing the file setup is 3 columns of tab delimited data,) it will also get appended to the table.

The implications of this are huge. You see, the issue that my friend complained about is not limited to his country at all. Every time I’ve taught PowerPivot to date, the same issue comes up from at least one participant. IT holds the database keys and won’t share, but they’ll send you a text file. No problem at all. Let them protect the integrity of the database, all you now need to do is make sure you receive your regular updates and pull them into your own purpose built solution.

Power Query and Power Pivot for the win here. That’s what I’m thinking. 🙂

7 thoughts on “From TXT files to BI solution”

What if the file you have already built is giant? like 30 million records, and you only need to append an additional 1 million records every month.

I don't want to start over and import 31 million records, that's time consuming.

Is it possible to do a true append? i.e keep the table/file I have already built, and jsut append the new records.

Hi Carlton,

Unfortunately, I don't think so. PowerQuery is all about querying the data source for records, and it doesn't take it on faith that your prior records have not changed. In fact, pretend for a moment that Excel had worksheets with 50 million rows and you loaded a 30 million row data set into PowerPivot. When you add another million rows there, and refresh PowerPivot, it also reaches back and refreshes the entire table, not just the new records. So I think it's a structural thing.

It would be interesting to mock up a quick test to see how long it takes to do a refresh on 30 million records though... maybe I'll give that a go.

Ken - fantastic post. Thanks for sharing your thoughts on this important scenario that we have been excited about enabling since we started working on Power Query.

Carlton - the feature you are asking for is something we call "incremental refresh". It is an important piece we'd like to enable at some point, but it is complicated. One of the simplifying assumptions we can make is that previously loaded data is immutable, and in scenarios such as log file mining, this would be perfectly fine. There are some basics we have to get out of the way first, such as getting the Excel Data Model to support incremental load and so on - but I hope this feature is just a matter of time.

Faisal Mohamood | Program Manager | Data Platform - Microsoft

Hi! Everyone have shared this great idea to help solve my problem; however, what I really need to do is mass import .txt files into Power Pivot. I have been told go do so via Power Query, but the problem is I need EACH txt file to import into PQ as separate datasets. 10 files = 10 datasets that when I import to PP will give me 10 sheets of data (for Excel). Can anyone help me?

Hi there,

Shouldn't be an issue... just create 10 separate routines to import them in Power Query. If you import the first by going to Power Query--> From File --> From Text, do your cleanup, then load it to the data model, that's your first done. Then just do the next 9.

Hi Ken,

Will it be possible to import a file of size 2 GB or larger into power query. That file would also need to be massaged later to get the right rows and columns. Please advise

Hi priyesh,

Microsoft's article on Power Query limitations can be found here: https://support.office.com/en-us/article/Power-Query-specifications-and-limits-5FB2807C-1B16-4257-AA5B-6793F051A9F4

In summary of what you'll find there, Power Query should be able to work with a 2GB file (providing you have sufficient RAM), although I think I'd want to be on 64 bit office to try it. (You could run out of memory in 32 bit.) In order to pull in that much data, however, you'd need to land it into the Power Pivot data model, unless you are processing it to reduce it below 1,048,576 rows (the Excel worksheet limit.)

Hope that helps!